💎 Test

Overview

By combining LLM abilities with static code analysis, the test tool generates tests for a selected component, based on the PR code changes.

It can be invoked manually by commenting /test on any PR, or triggered interactively through the analyze tool.

Example usage

Invoke the tool manually by commenting /test on any PR:

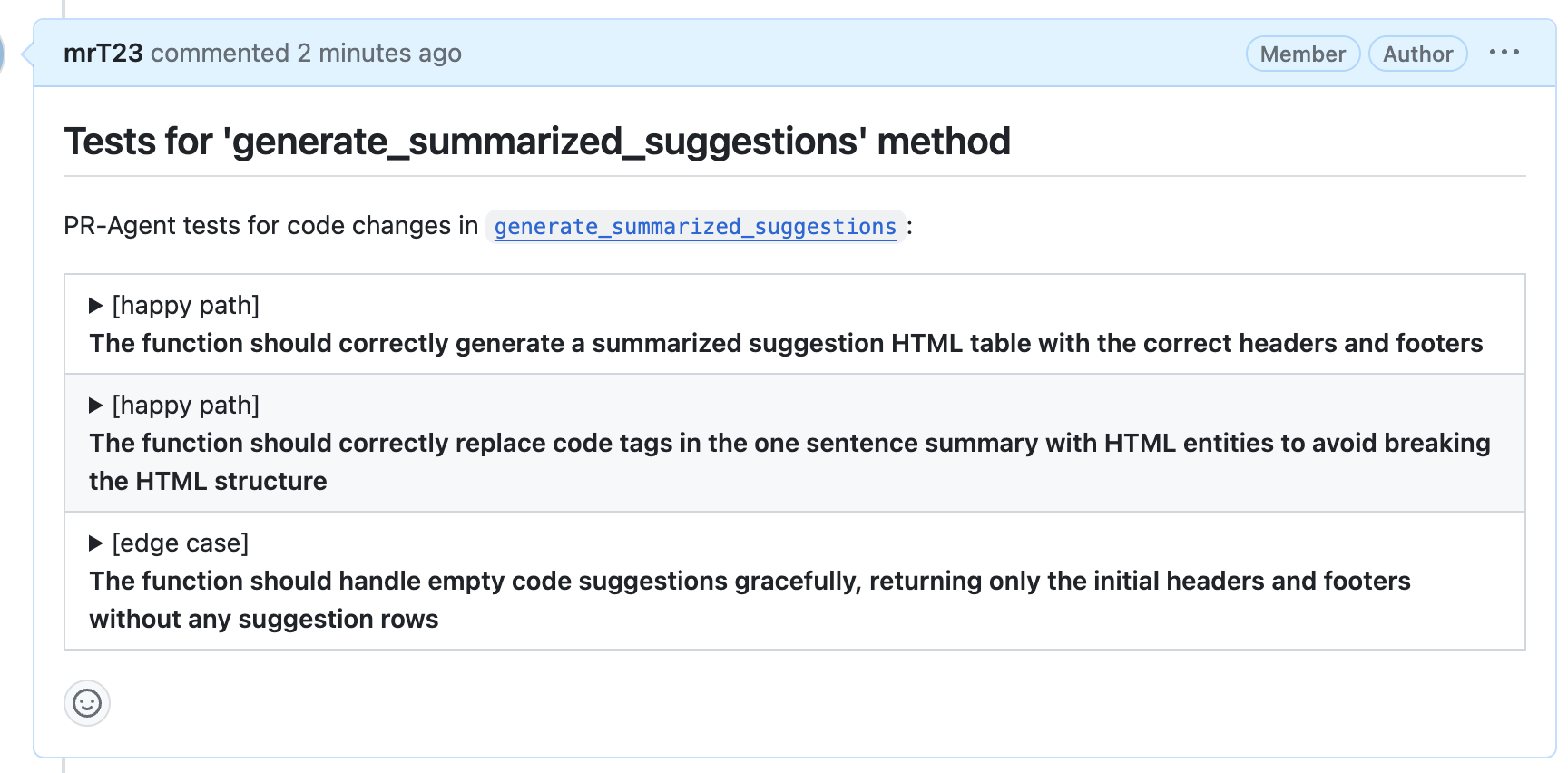

The tool will generate tests for the selected component (if no component is stated, it will generate tests for the largest component):
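The fallback rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the tool's actual implementation: the `Component` record, the `select_component` helper, and the use of line count as the measure of "largest" are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Component:
    """Hypothetical record for a component changed in the PR."""
    name: str
    file: str
    num_lines: int  # assumption: "largest" is measured in lines of code


def select_component(components: Sequence[Component],
                     requested: Optional[str] = None) -> Component:
    """Return the requested component, or the largest one if none is stated."""
    if not components:
        raise ValueError("the PR contains no components")
    if requested is None:
        # No component stated: fall back to the largest one.
        return max(components, key=lambda c: c.num_lines)
    for component in components:
        if component.name == requested:
            return component
    raise LookupError(f"component {requested!r} not found in the PR")


# Made-up example data, for illustration only.
changed = [
    Component("parse_args", "cli.py", 12),
    Component("run_pipeline", "pipeline.py", 48),
]
print(select_component(changed).name)                # run_pipeline
print(select_component(changed, "parse_args").name)  # parse_args
```

Raising an error for an unknown name, rather than silently falling back, is a design choice of this sketch; the source does not say how the tool handles that case.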

(Example taken from here):

Notes

- Languages currently supported by the tool: Python, Java, C++, JavaScript, TypeScript, C#.

- This tool can also be triggered interactively by using the analyze tool.

Configuration options

- num_tests: number of tests to generate. Default is 3.

- testing_framework: the testing framework to use. If not set, for Python it will use pytest, for Java it will use JUnit, for C++ it will use Catch2, and for JavaScript and TypeScript it will use jest.

- avoid_mocks: if set to true, the tool will try to avoid using mocks in the generated tests. Note that even if this option is set to true, the tool might still use mocks if it cannot generate a test without them. Default is true.

- extra_instructions: optional extra instructions to the tool. For example: "use the following mock injection scheme: ...".

- file: in case there are several components with the same name, you can specify the relevant file.

- class_name: in case there are several methods with the same name in the same file, you can specify the relevant class name.

- enable_help_text: if set to true, the tool will add a help text to the PR comment. Default is true.
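As a rough illustration of how the defaults described above fit together, the sketch below models the options in Python. The `ToolOptions` class and the `resolve_framework` helper are hypothetical names used only for illustration; the option names, the documented defaults, and the per-language frameworks come from the options described above. No default framework is documented for C#, so the sketch returns `None` in that case.

```python
from dataclasses import dataclass
from typing import Optional

# Per-language default frameworks as documented; none is stated for C#.
DEFAULT_FRAMEWORKS = {
    "python": "pytest",
    "java": "JUnit",
    "c++": "Catch2",
    "javascript": "jest",
    "typescript": "jest",
}


@dataclass
class ToolOptions:
    """Hypothetical container mirroring the documented options."""
    num_tests: int = 3                       # documented default
    testing_framework: Optional[str] = None  # unset: use the language default
    avoid_mocks: bool = True                 # documented default
    extra_instructions: str = ""             # assumption: empty when unset
    file: Optional[str] = None               # assumption: unset by default
    class_name: Optional[str] = None         # assumption: unset by default
    enable_help_text: bool = True            # documented default


def resolve_framework(options: ToolOptions, language: str) -> Optional[str]:
    """Use the explicit setting if present, else the per-language default."""
    if options.testing_framework:
        return options.testing_framework
    return DEFAULT_FRAMEWORKS.get(language.lower())


print(resolve_framework(ToolOptions(), "Python"))  # pytest
print(resolve_framework(ToolOptions(testing_framework="unittest"), "Python"))  # unittest
print(resolve_framework(ToolOptions(), "C#"))  # None
```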